k8s 课程规划

第六章 Pod控制器详解 本章节主要介绍各种Pod控制器的详细使用。

Pod控制器介绍 Pod是kubernetes的最小管理单元,在kubernetes中,按照pod的创建方式可以将其分为两类:

什么是Pod控制器

Pod控制器是管理pod的中间层,使用Pod控制器之后,只需要告诉Pod控制器,想要多少个什么样的Pod就可以了,它会创建出满足条件的Pod并确保每一个Pod资源处于用户期望的目标状态。如果Pod资源在运行中出现故障,它会基于指定策略重新编排Pod。

在kubernetes中,有很多类型的pod控制器,每种都有自己的适合的场景,常见的有下面这些:

ReplicationController:比较原始的pod控制器,已经被废弃,由ReplicaSet替代

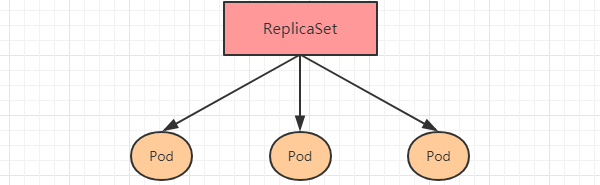

ReplicaSet:保证副本数量一直维持在期望值,并支持pod数量扩缩容,镜像版本升级

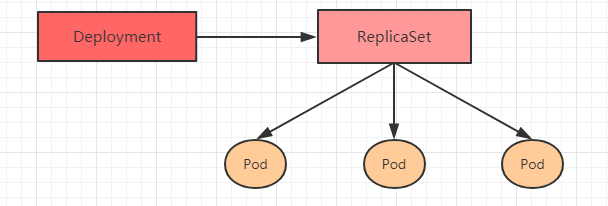

Deployment:通过控制ReplicaSet来控制Pod,并支持滚动升级、回退版本

Horizontal Pod Autoscaler:可以根据集群负载自动水平调整Pod的数量,实现削峰填谷

DaemonSet:在集群中的指定Node上运行且仅运行一个副本,一般用于守护进程类的任务

Job:它创建出来的pod只要完成任务就立即退出,不需要重启或重建,用于执行一次性任务

Cronjob:它创建的Pod负责周期性任务控制,不需要持续后台运行

StatefulSet:管理有状态应用

ReplicaSet(RS) ReplicaSet的主要作用是保证一定数量的pod正常运行 ,它会持续监听这些Pod的运行状态,一旦Pod发生故障,就会重启或重建。同时它还支持对pod数量的扩缩容和镜像版本的升降级。

ReplicaSet的资源清单文件:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 apiVersion: apps/v1 kind: ReplicaSet metadata: name: namespace: labels: controller: rs spec: replicas: 3 selector: matchLabels: app: nginx-pod matchExpressions: - {key: app , operator: In , values: [nginx-pod ]} template: metadata: labels: app: nginx-pod spec: containers: - name: nginx image: nginx:1.17.1 ports: - containerPort: 80

在这里面,需要新了解的配置项就是spec下面几个选项:

replicas:指定副本数量,其实就是当前rs创建出来的pod的数量,默认为1

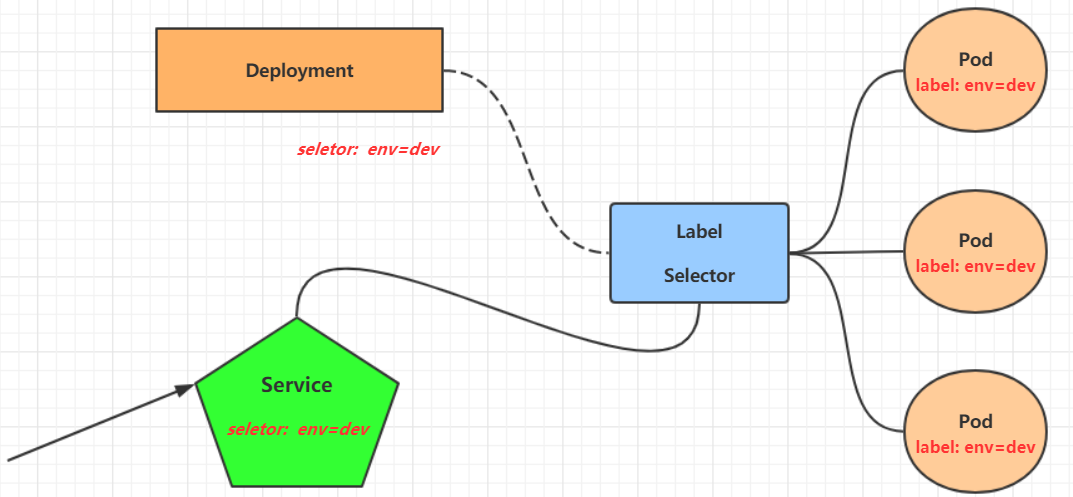

selector:选择器,它的作用是建立pod控制器和pod之间的关联关系,采用的Label Selector机制

在pod模板上定义label,在控制器上定义选择器,就可以表明当前控制器能管理哪些pod了

template:模板,就是当前控制器创建pod所使用的模板板,里面其实就是前一章学过的pod的定义

创建ReplicaSet

创建pc-replicaset.yaml文件,内容如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 apiVersion: apps/v1 kind: ReplicaSet metadata: name: pc-replicaset namespace: dev spec: replicas: 3 selector: matchLabels: app: nginx-pod template: metadata: labels: app: nginx-pod spec: containers: - name: nginx image: nginx:1.17.1

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 [root @master ~] replicaset.apps/pc-replicaset created [root @master ~] NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR pc-replicaset 3 3 3 22 s nginx nginx:1.17 .1 app=nginx-pod [root @master ~] NAME READY STATUS RESTARTS AGE pc-replicaset -6vmvt 1 /1 Running 0 54 s pc-replicaset -fmb8f 1 /1 Running 0 54 s pc-replicaset -snrk2 1 /1 Running 0 54 s

扩缩容

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 [root @master ~] replicaset.apps/pc-replicaset edited [root @master ~] NAME READY STATUS RESTARTS AGE pc-replicaset -6vmvt 1 /1 Running 0 114 m pc-replicaset -cftnp 1 /1 Running 0 10 s pc-replicaset -fjlm6 1 /1 Running 0 10 s pc-replicaset -fmb8f 1 /1 Running 0 114 m pc-replicaset -s2whj 1 /1 Running 0 10 s pc-replicaset -snrk2 1 /1 Running 0 114 m [root @master ~] replicaset.apps/pc-replicaset scaled [root @master ~] NAME READY STATUS RESTARTS AGE pc-replicaset -6vmvt 0 /1 Terminating 0 118 m pc-replicaset -cftnp 0 /1 Terminating 0 4 m17s pc-replicaset -fjlm6 0 /1 Terminating 0 4 m17s pc-replicaset -fmb8f 1 /1 Running 0 118 m pc-replicaset -s2whj 0 /1 Terminating 0 4 m17s pc-replicaset -snrk2 1 /1 Running 0 118 m [root @master ~] NAME READY STATUS RESTARTS AGE pc-replicaset -fmb8f 1 /1 Running 0 119 m pc-replicaset -snrk2 1 /1 Running 0 119 m

镜像升级

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 [root @master ~] replicaset.apps/pc-replicaset edited [root @master ~] NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES ... pc-replicaset 2 2 2 140 m nginx nginx:1.17 .2 ... [root @master ~] replicaset.apps/pc-replicaset image updated [root @master ~] NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES ... pc-replicaset 2 2 2 145 m nginx nginx:1.17 .1 ...

删除ReplicaSet

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 [root @master ~] replicaset.apps "pc-replicaset" deleted [root @master ~] No resources found in dev namespace. [root @master ~] replicaset.apps "pc-replicaset" deleted [root @master ~] NAME READY STATUS RESTARTS AGE pc-replicaset -cl82j 1 /1 Running 0 75 s pc-replicaset -dslhb 1 /1 Running 0 75 s [root @master ~] replicaset.apps "pc-replicaset" deleted

Deployment(Deploy) 为了更好的解决服务编排的问题,kubernetes在V1.2版本开始,引入了Deployment控制器。值得一提的是,这种控制器并不直接管理pod,而是通过管理ReplicaSet来简介管理Pod,即:Deployment管理ReplicaSet,ReplicaSet管理Pod。所以Deployment比ReplicaSet功能更加强大。

Deployment主要功能有下面几个:

支持ReplicaSet的所有功能

支持发布的停止、继续

支持滚动升级和回滚版本

Deployment的资源清单文件:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 apiVersion: apps/v1 kind: Deployment metadata: name: namespace: labels: controller: deploy spec: replicas: 3 revisionHistoryLimit: 3 paused: false progressDeadlineSeconds: 600 strategy: type: RollingUpdate rollingUpdate: maxSurge: 30 % maxUnavailable: 30 % selector: matchLabels: app: nginx-pod matchExpressions: - {key: app , operator: In , values: [nginx-pod ]} template: metadata: labels: app: nginx-pod spec: containers: - name: nginx image: nginx:1.17.1 ports: - containerPort: 80

创建deployment

创建pc-deployment.yaml,内容如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 apiVersion: apps/v1 kind: Deployment metadata: name: pc-deployment namespace: dev spec: replicas: 3 selector: matchLabels: app: nginx-pod template: metadata: labels: app: nginx-pod spec: containers: - name: nginx image: nginx:1.17.1

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 [root @master ~] deployment.apps/pc-deployment created [root @master ~] NAME READY UP-TO -DATE AVAILABLE AGE pc-deployment 3 /3 3 3 15 s [root @master ~] NAME DESIRED CURRENT READY AGE pc-deployment -6696798b78 3 3 3 23 s [root @master ~] NAME READY STATUS RESTARTS AGE pc-deployment -6696798b78 -d2c8n 1 /1 Running 0 107 s pc-deployment -6696798b78 -smpvp 1 /1 Running 0 107 s pc-deployment -6696798b78 -wvjd8 1 /1 Running 0 107 s

扩缩容

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 [root @master ~] deployment.apps/pc-deployment scaled [root @master ~] NAME READY UP-TO -DATE AVAILABLE AGE pc-deployment 5 /5 5 5 2 m [root @master ~] NAME READY STATUS RESTARTS AGE pc-deployment -6696798b78 -d2c8n 1 /1 Running 0 4 m19s pc-deployment -6696798b78 -jxmdq 1 /1 Running 0 94 s pc-deployment -6696798b78 -mktqv 1 /1 Running 0 93 s pc-deployment -6696798b78 -smpvp 1 /1 Running 0 4 m19s pc-deployment -6696798b78 -wvjd8 1 /1 Running 0 4 m19s [root @master ~] deployment.apps/pc-deployment edited [root @master ~] NAME READY STATUS RESTARTS AGE pc-deployment -6696798b78 -d2c8n 1 /1 Running 0 5 m23s pc-deployment -6696798b78 -jxmdq 1 /1 Running 0 2 m38s pc-deployment -6696798b78 -smpvp 1 /1 Running 0 5 m23s pc-deployment -6696798b78 -wvjd8 1 /1 Running 0 5 m23s

镜像更新

deployment支持两种更新策略:重建更新和滚动更新,可以通过strategy指定策略类型,支持两个属性:

1 2 3 4 5 6 7 strategy:指定新的Pod替换旧的Pod的策略, 支持两个属性: type:指定策略类型,支持两种策略 Recreate:在创建出新的Pod之前会先杀掉所有已存在的Pod RollingUpdate:滚动更新,就是杀死一部分,就启动一部分,在更新过程中,存在两个版本Pod rollingUpdate:当type为RollingUpdate时生效,用于为RollingUpdate设置参数,支持两个属性: maxUnavailable:用来指定在升级过程中不可用Pod的最大数量,默认为25%。 maxSurge: 用来指定在升级过程中可以超过期望的Pod的最大数量,默认为25%。

重建更新

编辑pc-deployment.yaml,在spec节点下添加更新策略

1 2 3 spec: strategy: type: Recreate

创建deploy进行验证

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 [root @master ~] deployment.apps/pc-deployment image updated [root @master ~] NAME READY STATUS RESTARTS AGE pc-deployment -5d89bdfbf9 -65qcw 1 /1 Running 0 31 s pc-deployment -5d89bdfbf9 -w5nzv 1 /1 Running 0 31 s pc-deployment -5d89bdfbf9 -xpt7w 1 /1 Running 0 31 s pc-deployment -5d89bdfbf9 -xpt7w 1 /1 Terminating 0 41 s pc-deployment -5d89bdfbf9 -65qcw 1 /1 Terminating 0 41 s pc-deployment -5d89bdfbf9 -w5nzv 1 /1 Terminating 0 41 s pc-deployment -675d469f8b -grn8z 0 /1 Pending 0 0 s pc-deployment -675d469f8b -hbl4v 0 /1 Pending 0 0 s pc-deployment -675d469f8b -67nz2 0 /1 Pending 0 0 s pc-deployment -675d469f8b -grn8z 0 /1 ContainerCreating 0 0 s pc-deployment -675d469f8b -hbl4v 0 /1 ContainerCreating 0 0 s pc-deployment -675d469f8b -67nz2 0 /1 ContainerCreating 0 0 s pc-deployment -675d469f8b -grn8z 1 /1 Running 0 1 s pc-deployment -675d469f8b -67nz2 1 /1 Running 0 1 s pc-deployment -675d469f8b -hbl4v 1 /1 Running 0 2 s

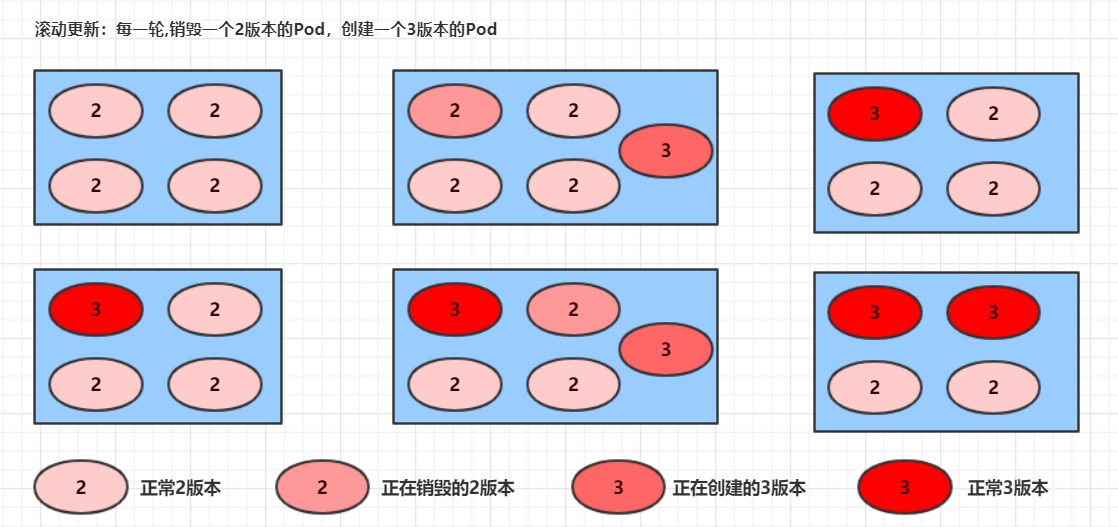

滚动更新

编辑pc-deployment.yaml,在spec节点下添加更新策略

1 2 3 4 5 6 spec: strategy: type: RollingUpdate rollingUpdate: maxSurge: 25 % maxUnavailable: 25 %

创建deploy进行验证

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 [root @master ~] deployment.apps/pc-deployment image updated [root @master ~] NAME READY STATUS RESTARTS AGE pc-deployment -c848d767 -8rbzt 1 /1 Running 0 31 m pc-deployment -c848d767 -h4p68 1 /1 Running 0 31 m pc-deployment -c848d767 -hlmz4 1 /1 Running 0 31 m pc-deployment -c848d767 -rrqcn 1 /1 Running 0 31 m pc-deployment -966bf7f44 -226rx 0 /1 Pending 0 0 s pc-deployment -966bf7f44 -226rx 0 /1 ContainerCreating 0 0 s pc-deployment -966bf7f44 -226rx 1 /1 Running 0 1 s pc-deployment -c848d767 -h4p68 0 /1 Terminating 0 34 m pc-deployment -966bf7f44 -cnd44 0 /1 Pending 0 0 s pc-deployment -966bf7f44 -cnd44 0 /1 ContainerCreating 0 0 s pc-deployment -966bf7f44 -cnd44 1 /1 Running 0 2 s pc-deployment -c848d767 -hlmz4 0 /1 Terminating 0 34 m pc-deployment -966bf7f44 -px48p 0 /1 Pending 0 0 s pc-deployment -966bf7f44 -px48p 0 /1 ContainerCreating 0 0 s pc-deployment -966bf7f44 -px48p 1 /1 Running 0 0 s pc-deployment -c848d767 -8rbzt 0 /1 Terminating 0 34 m pc-deployment -966bf7f44 -dkmqp 0 /1 Pending 0 0 s pc-deployment -966bf7f44 -dkmqp 0 /1 ContainerCreating 0 0 s pc-deployment -966bf7f44 -dkmqp 1 /1 Running 0 2 s pc-deployment -c848d767 -rrqcn 0 /1 Terminating 0 34 m

滚动更新的过程:

镜像滚动更新中rs的变化:

1 2 3 4 5 6 7 [root @master ~] NAME DESIRED CURRENT READY AGE pc-deployment -6696798b78 0 0 0 7 m37s pc-deployment -6696798b11 0 0 0 5 m37s pc-deployment -c848d76789 4 4 4 72 s

版本回退

deployment支持版本升级过程中的暂停、继续功能以及版本回退等诸多功能,下面具体来看.

kubectl rollout: 版本升级相关功能,支持下面的选项:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 [root @master ~] deployment "pc-deployment" successfully rolled out [root @master ~] deployment.apps/pc-deployment REVISION CHANGE-CAUSE 1 kubectl create --filename =pc-deployment .yaml --record =true2 kubectl create --filename =pc-deployment .yaml --record =true3 kubectl create --filename =pc-deployment .yaml --record =true[root @master ~] deployment.apps/pc-deployment rolled back [root @master ~] NAME READY UP-TO -DATE AVAILABLE AGE CONTAINERS IMAGES pc-deployment 4 /4 4 4 74 m nginx nginx:1.17 .1 [root @master ~] NAME DESIRED CURRENT READY AGE pc-deployment -6696798b78 4 4 4 78 m pc-deployment -966bf7f44 0 0 0 37 m pc-deployment -c848d767 0 0 0 71 m

金丝雀发布

Deployment控制器支持控制更新过程中的控制,如“暂停(pause)”或“继续(resume)”更新操作。

比如有一批新的Pod资源创建完成后立即暂停更新过程,此时,仅存在一部分新版本的应用,主体部分还是旧的版本。然后,再筛选一小部分的用户请求路由到新版本的Pod应用,继续观察能否稳定地按期望的方式运行。确定没问题之后再继续完成余下的Pod资源滚动更新,否则立即回滚更新操作。这就是所谓的金丝雀发布。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 [root @master ~] deployment.apps/pc-deployment image updated deployment.apps/pc-deployment paused [root @master ~] Waiting for deployment "pc-deployment" rollout to finish: 2 out of 4 new replicas have been updated... [root @master ~] NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES pc-deployment -5d89bdfbf9 3 3 3 19 m nginx nginx:1.17 .1 pc-deployment -675d469f8b 0 0 0 14 m nginx nginx:1.17 .2 pc-deployment -6c9f56fcfb 2 2 2 3 m16s nginx nginx:1.17 .4 [root @master ~] NAME READY STATUS RESTARTS AGE pc-deployment -5d89bdfbf9 -rj8sq 1 /1 Running 0 7 m33s pc-deployment -5d89bdfbf9 -ttwgg 1 /1 Running 0 7 m35s pc-deployment -5d89bdfbf9 -v4wvc 1 /1 Running 0 7 m34s pc-deployment -6c9f56fcfb -996rt 1 /1 Running 0 3 m31s pc-deployment -6c9f56fcfb -j2gtj 1 /1 Running 0 3 m31s [root @master ~] deployment.apps/pc-deployment resumed [root @master ~] NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES pc-deployment -5d89bdfbf9 0 0 0 21 m nginx nginx:1.17 .1 pc-deployment -675d469f8b 0 0 0 16 m nginx nginx:1.17 .2 pc-deployment -6c9f56fcfb 4 4 4 5 m11s nginx nginx:1.17 .4 [root @master ~] NAME READY STATUS RESTARTS AGE pc-deployment -6c9f56fcfb -7bfwh 1 /1 Running 0 37 s pc-deployment -6c9f56fcfb -996rt 1 /1 Running 0 5 m27s pc-deployment -6c9f56fcfb -j2gtj 1 /1 Running 0 5 m27s pc-deployment -6c9f56fcfb -rf84v 1 /1 Running 0 37 s

删除Deployment

1 2 3 [root @master ~] deployment.apps "pc-deployment" deleted

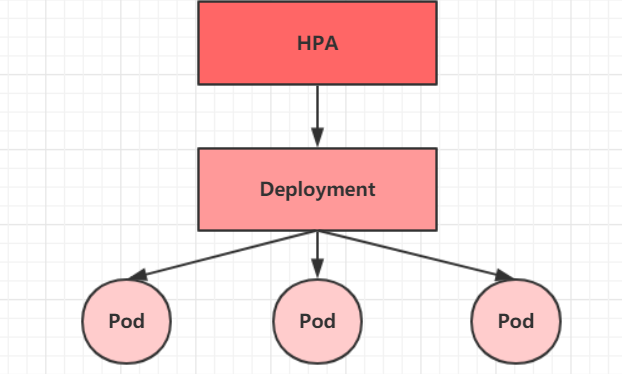

Horizontal Pod Autoscaler(HPA) 在前面的课程中,我们已经可以实现通过手工执行kubectl scale命令实现Pod扩容或缩容,但是这显然不符合Kubernetes的定位目标–自动化、智能化。 Kubernetes期望可以实现通过监测Pod的使用情况,实现pod数量的自动调整,于是就产生了Horizontal Pod Autoscaler(HPA)这种控制器。

HPA可以获取每个Pod利用率,然后和HPA中定义的指标进行对比,同时计算出需要伸缩的具体值,最后实现Pod的数量的调整。其实HPA与之前的Deployment一样,也属于一种Kubernetes资源对象,它通过追踪分析RC控制的所有目标Pod的负载变化情况,来确定是否需要针对性地调整目标Pod的副本数,这是HPA的实现原理。

接下来,我们来做一个实验

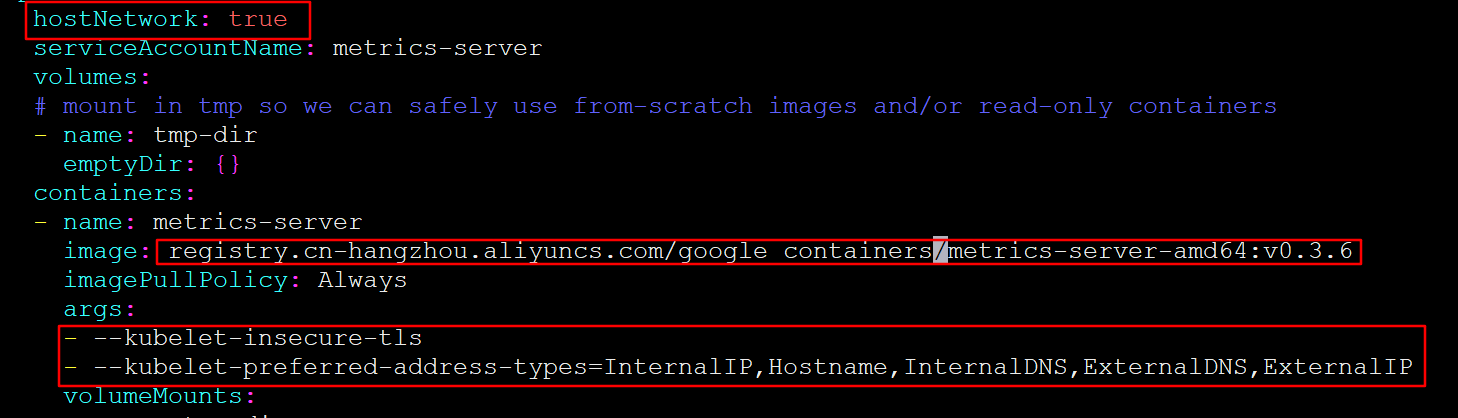

1 安装metrics-server

metrics-server可以用来收集集群中的资源使用情况

1 2 3 4 5 6 7 8 9 10 11 12 13 [root @master ~] [root @master ~] [root @master ~] [root @master 1.8 +] 按图中添加下面选项 hostNetwork: true image: registry.cn-hangzhou .aliyuncs.com/google_containers/metrics-server -amd64 :v0.3.6 args: - --kubelet -insecure -tls - --kubelet -preferred -address -types =InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 [root @master 1.8 +] [root @master 1.8 +] metrics-server -6b976979db -2xwbj 1 /1 Running 0 90 s [root @master 1.8 +] NAME CPU(cores) CPU% MEMORY(bytes) MEMORY% master 98 m 4 % 1067 Mi 62 % node1 27 m 1 % 727 Mi 42 % node2 34 m 1 % 800 Mi 46 % [root @master 1.8 +] NAME CPU(cores) MEMORY(bytes) coredns-6955765f44 -7ptsb 3 m 9 Mi coredns-6955765f44 -vcwr5 3 m 8 Mi etcd-master 14 m 145 Mi ...

2 准备deployment和servie

为了操作简单,直接使用命令

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 [root @master 1.8 +] [root @master 1.8 +] [root @master 1.8 +] NAME READY UP-TO -DATE AVAILABLE AGE deployment.apps/nginx 1 /1 1 1 47 s NAME READY STATUS RESTARTS AGE pod/nginx-7df9756ccc -bh8dr 1 /1 Running 0 47 s NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE service/nginx NodePort 10.109 .57.248 <none> 80 :31136 /TCP 35 s

3 部署HPA

创建pc-hpa.yaml

1 2 3 4 5 6 7 8 9 10 11 12 13 apiVersion: autoscaling/v1 kind: HorizontalPodAutoscaler metadata: name: pc-hpa namespace: dev spec: minReplicas: 1 maxReplicas: 10 targetCPUUtilizationPercentage: 3 scaleTargetRef: apiVersion: apps/v1 kind: Deployment name: nginx

1 2 3 4 5 6 7 8 [root @master 1.8 +] horizontalpodautoscaler.autoscaling/pc-hpa created [root @master 1.8 +] NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE pc-hpa Deployment/nginx 0 %/3 % 1 10 1 62 s

4 测试

使用压测工具对service地址192.168.109.100:31136进行压测,然后通过控制台查看hpa和pod的变化

hpa变化

1 2 3 4 5 6 7 8 9 10 11 [root @master ~] NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE pc-hpa Deployment/nginx 0 %/3 % 1 10 1 4 m11s pc-hpa Deployment/nginx 0 %/3 % 1 10 1 5 m19s pc-hpa Deployment/nginx 22 %/3 % 1 10 1 6 m50s pc-hpa Deployment/nginx 22 %/3 % 1 10 4 7 m5s pc-hpa Deployment/nginx 22 %/3 % 1 10 8 7 m21s pc-hpa Deployment/nginx 6 %/3 % 1 10 8 7 m51s pc-hpa Deployment/nginx 0 %/3 % 1 10 8 9 m6s pc-hpa Deployment/nginx 0 %/3 % 1 10 8 13 m pc-hpa Deployment/nginx 0 %/3 % 1 10 1 14 m

deployment变化

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 [root @master ~] NAME READY UP-TO -DATE AVAILABLE AGE nginx 1 /1 1 1 11 m nginx 1 /4 1 1 13 m nginx 1 /4 1 1 13 m nginx 1 /4 1 1 13 m nginx 1 /4 4 1 13 m nginx 1 /8 4 1 14 m nginx 1 /8 4 1 14 m nginx 1 /8 4 1 14 m nginx 1 /8 8 1 14 m nginx 2 /8 8 2 14 m nginx 3 /8 8 3 14 m nginx 4 /8 8 4 14 m nginx 5 /8 8 5 14 m nginx 6 /8 8 6 14 m nginx 7 /8 8 7 14 m nginx 8 /8 8 8 15 m nginx 8 /1 8 8 20 m nginx 8 /1 8 8 20 m nginx 1 /1 1 1 20 m

pod变化

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 [root @master ~] NAME READY STATUS RESTARTS AGE nginx-7df9756ccc -bh8dr 1 /1 Running 0 11 m nginx-7df9756ccc -cpgrv 0 /1 Pending 0 0 s nginx-7df9756ccc -8zhwk 0 /1 Pending 0 0 s nginx-7df9756ccc -rr9bn 0 /1 Pending 0 0 s nginx-7df9756ccc -cpgrv 0 /1 ContainerCreating 0 0 s nginx-7df9756ccc -8zhwk 0 /1 ContainerCreating 0 0 s nginx-7df9756ccc -rr9bn 0 /1 ContainerCreating 0 0 s nginx-7df9756ccc -m9gsj 0 /1 Pending 0 0 s nginx-7df9756ccc -g56qb 0 /1 Pending 0 0 s nginx-7df9756ccc -sl9c6 0 /1 Pending 0 0 s nginx-7df9756ccc -fgst7 0 /1 Pending 0 0 s nginx-7df9756ccc -g56qb 0 /1 ContainerCreating 0 0 s nginx-7df9756ccc -m9gsj 0 /1 ContainerCreating 0 0 s nginx-7df9756ccc -sl9c6 0 /1 ContainerCreating 0 0 s nginx-7df9756ccc -fgst7 0 /1 ContainerCreating 0 0 s nginx-7df9756ccc -8zhwk 1 /1 Running 0 19 s nginx-7df9756ccc -rr9bn 1 /1 Running 0 30 s nginx-7df9756ccc -m9gsj 1 /1 Running 0 21 s nginx-7df9756ccc -cpgrv 1 /1 Running 0 47 s nginx-7df9756ccc -sl9c6 1 /1 Running 0 33 s nginx-7df9756ccc -g56qb 1 /1 Running 0 48 s nginx-7df9756ccc -fgst7 1 /1 Running 0 66 s nginx-7df9756ccc -fgst7 1 /1 Terminating 0 6 m50s nginx-7df9756ccc -8zhwk 1 /1 Terminating 0 7 m5s nginx-7df9756ccc -cpgrv 1 /1 Terminating 0 7 m5s nginx-7df9756ccc -g56qb 1 /1 Terminating 0 6 m50s nginx-7df9756ccc -rr9bn 1 /1 Terminating 0 7 m5s nginx-7df9756ccc -m9gsj 1 /1 Terminating 0 6 m50s nginx-7df9756ccc -sl9c6 1 /1 Terminating 0 6 m50s

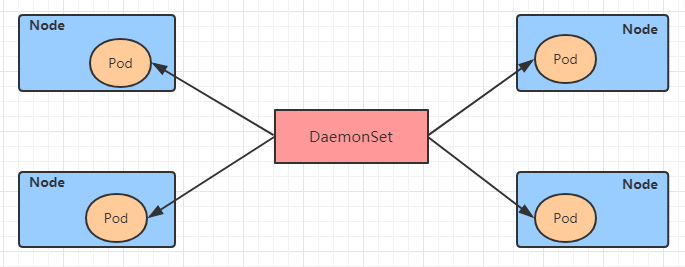

DaemonSet(DS) DaemonSet类型的控制器可以保证在集群中的每一台(或指定)节点上都运行一个副本。一般适用于日志收集、节点监控等场景。也就是说,如果一个Pod提供的功能是节点级别的(每个节点都需要且只需要一个),那么这类Pod就适合使用DaemonSet类型的控制器创建。

DaemonSet控制器的特点:

每当向集群中添加一个节点时,指定的 Pod 副本也将添加到该节点上

当节点从集群中移除时,Pod 也就被垃圾回收了

下面先来看下DaemonSet的资源清单文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 apiVersion: apps/v1 kind: DaemonSet metadata: name: namespace: labels: controller: daemonset spec: revisionHistoryLimit: 3 updateStrategy: type: RollingUpdate rollingUpdate: maxUnavailable: 1 selector: matchLabels: app: nginx-pod matchExpressions: - {key: app , operator: In , values: [nginx-pod ]} template: metadata: labels: app: nginx-pod spec: containers: - name: nginx image: nginx:1.17.1 ports: - containerPort: 80

创建pc-daemonset.yaml,内容如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 apiVersion: apps/v1 kind: DaemonSet metadata: name: pc-daemonset namespace: dev spec: selector: matchLabels: app: nginx-pod template: metadata: labels: app: nginx-pod spec: containers: - name: nginx image: nginx:1.17.1

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 [root @master ~] daemonset.apps/pc-daemonset created [root @master ~] NAME DESIRED CURRENT READY UP-TO -DATE AVAILABLE AGE CONTAINERS IMAGES pc-daemonset 2 2 2 2 2 24 s nginx nginx:1.17 .1 [root @master ~] NAME READY STATUS RESTARTS AGE IP NODE pc-daemonset -9bck8 1 /1 Running 0 37 s 10.244 .1.43 node1 pc-daemonset -k224w 1 /1 Running 0 37 s 10.244 .2.74 node2 [root @master ~] daemonset.apps "pc-daemonset" deleted

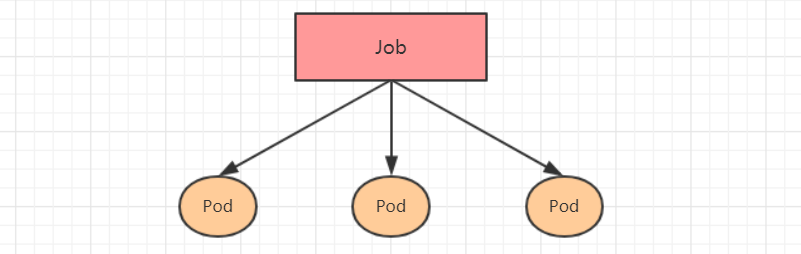

Job Job,主要用于负责**批量处理(一次要处理指定数量任务)短暂的 一次性(每个任务仅运行一次就结束)**任务。Job特点如下:

当Job创建的pod执行成功结束时,Job将记录成功结束的pod数量

当成功结束的pod达到指定的数量时,Job将完成执行

Job的资源清单文件:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 apiVersion: batch/v1 kind: Job metadata: name: namespace: labels: controller: job spec: completions: 1 parallelism: 1 activeDeadlineSeconds: 30 backoffLimit: 6 manualSelector: true selector: matchLabels: app: counter-pod matchExpressions: - {key: app , operator: In , values: [counter-pod ]} template: metadata: labels: app: counter-pod spec: restartPolicy: Never containers: - name: counter image: busybox:1.30 command: ["bin/sh" ,"-c" ,"for i in 9 8 7 6 5 4 3 2 1; do echo $i;sleep 2;done" ]

1 2 3 4 关于重启策略设置的说明: 如果指定为OnFailure,则job会在pod出现故障时重启容器,而不是创建pod,failed次数不变 如果指定为Never,则job会在pod出现故障时创建新的pod,并且故障pod不会消失,也不会重启,failed次数加1 如果指定为Always的话,就意味着一直重启,意味着job任务会重复去执行了,当然不对,所以不能设置为Always

创建pc-job.yaml,内容如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 apiVersion: batch/v1 kind: Job metadata: name: pc-job namespace: dev spec: manualSelector: true selector: matchLabels: app: counter-pod template: metadata: labels: app: counter-pod spec: restartPolicy: Never containers: - name: counter image: busybox:1.30 command: ["bin/sh" ,"-c" ,"for i in 9 8 7 6 5 4 3 2 1; do echo $i;sleep 3;done" ]

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 [root @master ~] job.batch/pc-job created [root @master ~] NAME COMPLETIONS DURATION AGE CONTAINERS IMAGES SELECTOR pc-job 0 /1 21 s 21 s counter busybox:1.30 app=counter-pod pc-job 1 /1 31 s 79 s counter busybox:1.30 app=counter-pod [root @master ~] NAME READY STATUS RESTARTS AGE pc-job -rxg96 1 /1 Running 0 29 s pc-job -rxg96 0 /1 Completed 0 33 s [root @master ~] NAME READY STATUS RESTARTS AGE pc-job -684ft 1 /1 Running 0 5 s pc-job -jhj49 1 /1 Running 0 5 s pc-job -pfcvh 1 /1 Running 0 5 s pc-job -684ft 0 /1 Completed 0 11 s pc-job -v7rhr 0 /1 Pending 0 0 s pc-job -v7rhr 0 /1 Pending 0 0 s pc-job -v7rhr 0 /1 ContainerCreating 0 0 s pc-job -jhj49 0 /1 Completed 0 11 s pc-job -fhwf7 0 /1 Pending 0 0 s pc-job -fhwf7 0 /1 Pending 0 0 s pc-job -pfcvh 0 /1 Completed 0 11 s pc-job -5vg2j 0 /1 Pending 0 0 s pc-job -fhwf7 0 /1 ContainerCreating 0 0 s pc-job -5vg2j 0 /1 Pending 0 0 s pc-job -5vg2j 0 /1 ContainerCreating 0 0 s pc-job -fhwf7 1 /1 Running 0 2 s pc-job -v7rhr 1 /1 Running 0 2 s pc-job -5vg2j 1 /1 Running 0 3 s pc-job -fhwf7 0 /1 Completed 0 12 s pc-job -v7rhr 0 /1 Completed 0 12 s pc-job -5vg2j 0 /1 Completed 0 12 s [root @master ~] job.batch "pc-job" deleted

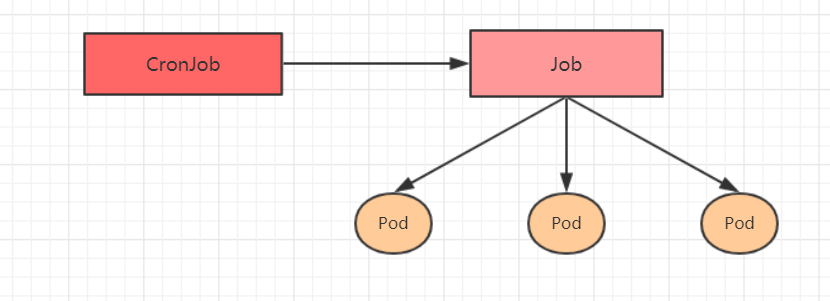

CronJob(CJ) CronJob控制器以Job控制器资源为其管控对象,并借助它管理pod资源对象,Job控制器定义的作业任务在其控制器资源创建之后便会立即执行,但CronJob可以以类似于Linux操作系统的周期性任务作业计划的方式控制其运行时间点 及重复运行 的方式。也就是说,CronJob可以在特定的时间点(反复的)去运行job任务 。

CronJob的资源清单文件:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 apiVersion: batch/v1beta1 kind: CronJob metadata: name: namespace: labels: controller: cronjob spec: schedule: concurrencyPolicy: failedJobHistoryLimit: successfulJobHistoryLimit: startingDeadlineSeconds: jobTemplate: metadata: spec: completions: 1 parallelism: 1 activeDeadlineSeconds: 30 backoffLimit: 6 manualSelector: true selector: matchLabels: app: counter-pod matchExpressions: 规则 - {key: app , operator: In , values: [counter-pod ]} template: metadata: labels: app: counter-pod spec: restartPolicy: Never containers: - name: counter image: busybox:1.30 command: ["bin/sh" ,"-c" ,"for i in 9 8 7 6 5 4 3 2 1; do echo $i;sleep 20;done" ]

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 需要重点解释的几个选项: schedule: cron表达式,用于指定任务的执行时间 */1 * * * * <分钟> <小时> <日> <月份> <星期> 分钟 值从 0 到 59. 小时 值从 0 到 23. 日 值从 1 到 31. 月 值从 1 到 12. 星期 值从 0 到 6, 0 代表星期日 多个时间可以用逗号隔开; 范围可以用连字符给出;*可以作为通配符; /表示每... concurrencyPolicy: Allow: 允许Jobs并发运行(默认) Forbid: 禁止并发运行,如果上一次运行尚未完成,则跳过下一次运行 Replace: 替换,取消当前正在运行的作业并用新作业替换它

创建pc-cronjob.yaml,内容如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 apiVersion: batch/v1beta1 kind: CronJob metadata: name: pc-cronjob namespace: dev labels: controller: cronjob spec: schedule: "*/1 * * * *" jobTemplate: metadata: spec: template: spec: restartPolicy: Never containers: - name: counter image: busybox:1.30 command: ["bin/sh" ,"-c" ,"for i in 9 8 7 6 5 4 3 2 1; do echo $i;sleep 3;done" ]

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 [root @master ~] cronjob.batch/pc-cronjob created [root @master ~] NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE pc-cronjob */1 * * * * False 0 <none> 6 s [root @master ~] NAME COMPLETIONS DURATION AGE pc-cronjob -1592587800 1 /1 28 s 3 m26s pc-cronjob -1592587860 1 /1 28 s 2 m26s pc-cronjob -1592587920 1 /1 28 s 86 s [root @master ~] pc-cronjob -1592587800 -x4tsm 0 /1 Completed 0 2 m24s pc-cronjob -1592587860 -r5gv4 0 /1 Completed 0 84 s pc-cronjob -1592587920 -9dxxq 1 /1 Running 0 24 s [root @master ~] cronjob.batch "pc-cronjob" deleted

第七章 Service详解 本章节主要介绍kubernetes的流量负载组件:Service和Ingress。

Service介绍 在kubernetes中,pod是应用程序的载体,我们可以通过pod的ip来访问应用程序,但是pod的ip地址不是固定的,这也就意味着不方便直接采用pod的ip对服务进行访问。

为了解决这个问题,kubernetes提供了Service资源,Service会对提供同一个服务的多个pod进行聚合,并且提供一个统一的入口地址。通过访问Service的入口地址就能访问到后面的pod服务。

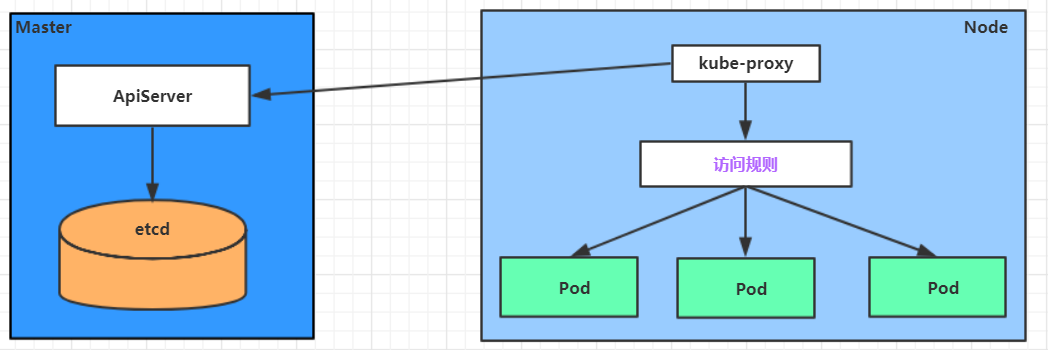

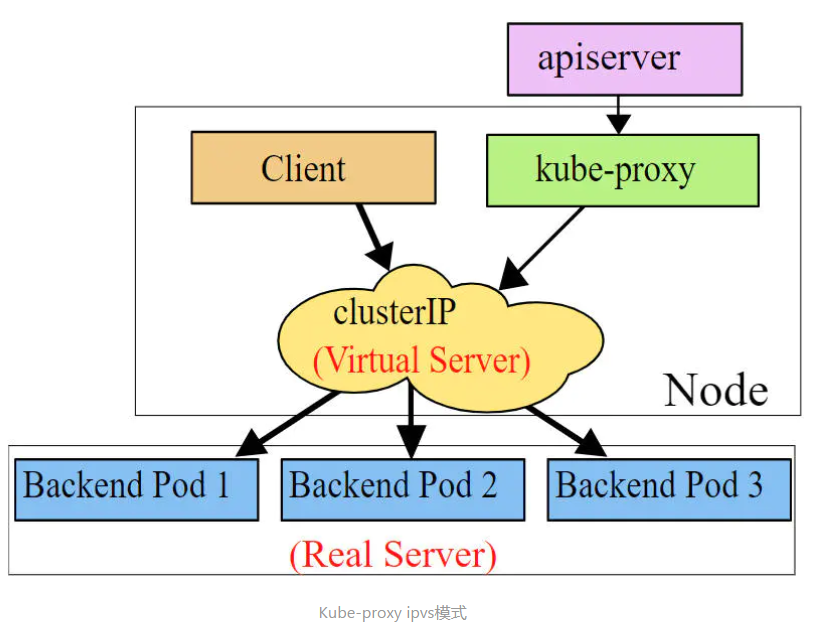

Service在很多情况下只是一个概念,真正起作用的其实是kube-proxy服务进程,每个Node节点上都运行着一个kube-proxy服务进程。当创建Service的时候会通过api-server向etcd写入创建的service的信息,而kube-proxy会基于监听的机制发现这种Service的变动,然后它会将最新的Service信息转换成对应的访问规则 。

1 2 3 4 5 6 7 8 9 10 11 12 [root @node1 ~] IP Virtual Server version 1.2 .1 (size=4096 ) Prot LocalAddress:Port Scheduler Flags -> RemoteAddress:Port Forward Weight ActiveConn InActConn TCP 10.97 .97.97 :80 rr -> 10.244 .1.39 :80 Masq 1 0 0 -> 10.244 .1.40 :80 Masq 1 0 0 -> 10.244 .2.33 :80 Masq 1 0 0

kube-proxy目前支持三种工作模式:

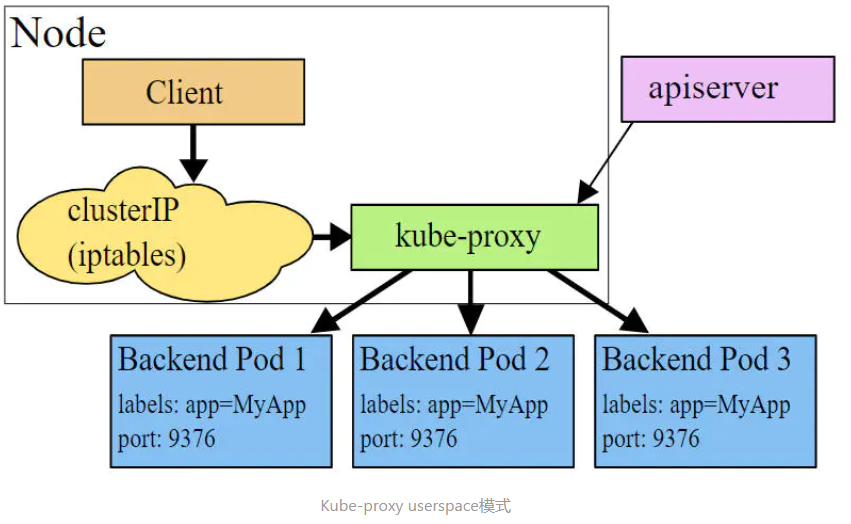

userspace 模式

userspace模式下,kube-proxy会为每一个Service创建一个监听端口,发向Cluster IP的请求被Iptables规则重定向到kube-proxy监听的端口上,kube-proxy根据LB算法选择一个提供服务的Pod并和其建立链接,以将请求转发到Pod上。

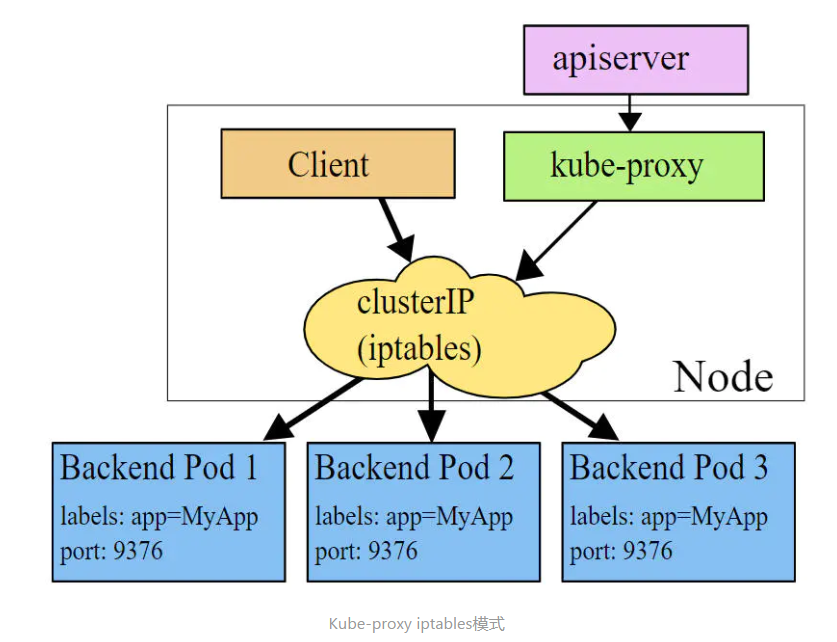

iptables 模式

iptables模式下,kube-proxy为service后端的每个Pod创建对应的iptables规则,直接将发向Cluster IP的请求重定向到一个Pod IP。

ipvs 模式

ipvs模式和iptables类似,kube-proxy监控Pod的变化并创建相应的ipvs规则。ipvs相对iptables转发效率更高。除此以外,ipvs支持更多的LB算法。

1 2 3 4 5 6 7 8 9 10 11 12 [root @master ~] [root @master ~] [root @node1 ~] IP Virtual Server version 1.2 .1 (size=4096 ) Prot LocalAddress:Port Scheduler Flags -> RemoteAddress:Port Forward Weight ActiveConn InActConn TCP 10.97 .97.97 :80 rr -> 10.244 .1.39 :80 Masq 1 0 0 -> 10.244 .1.40 :80 Masq 1 0 0 -> 10.244 .2.33 :80 Masq 1 0 0

Service类型 Service的资源清单文件:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 kind: Service apiVersion: v1 metadata: name: service namespace: dev spec: selector: app: nginx type: clusterIP: sessionAffinity: ports: - protocol: TCP port: 3017 targetPort: 5003 nodePort: 31122

ClusterIP:默认值,它是Kubernetes系统自动分配的虚拟IP,只能在集群内部访问

NodePort:将Service通过指定的Node上的端口暴露给外部,通过此方法,就可以在集群外部访问服务

LoadBalancer:使用外接负载均衡器完成到服务的负载分发,注意此模式需要外部云环境支持

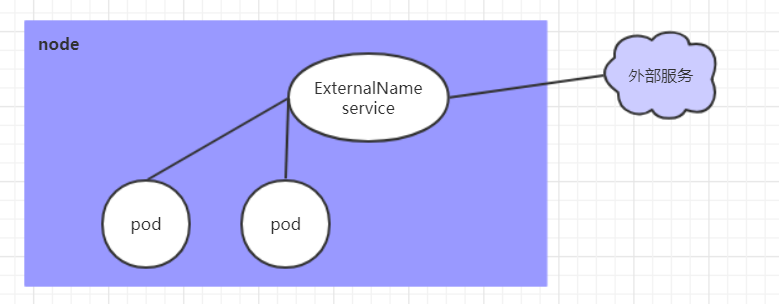

ExternalName: 把集群外部的服务引入集群内部,直接使用

Service使用 实验环境准备 在使用service之前,首先利用Deployment创建出3个pod,注意要为pod设置app=nginx-pod的标签

创建deployment.yaml,内容如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 apiVersion: apps/v1 kind: Deployment metadata: name: pc-deployment namespace: dev spec: replicas: 3 selector: matchLabels: app: nginx-pod template: metadata: labels: app: nginx-pod spec: containers: - name: nginx image: nginx:1.17.1 ports: - containerPort: 80

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 [root @master ~] deployment.apps/pc-deployment created [root @master ~] NAME READY STATUS IP NODE LABELS pc-deployment -66cb59b984 -8p84h 1 /1 Running 10.244 .1.40 node1 app=nginx-pod pc-deployment -66cb59b984 -vx8vx 1 /1 Running 10.244 .2.33 node2 app=nginx-pod pc-deployment -66cb59b984 -wnncx 1 /1 Running 10.244 .1.39 node1 app=nginx-pod [root @master ~] 10.244 .1.40 [root @master ~] 10.244 .2.33 [root @master ~] 10.244 .1.39

ClusterIP类型的Service 创建service-clusterip.yaml文件

1 2 3 4 5 6 7 8 9 10 11 12 13 apiVersion: v1 kind: Service metadata: name: service-clusterip namespace: dev spec: selector: app: nginx-pod clusterIP: 10.97 .97 .97 type: ClusterIP ports: - port: 80 targetPort: 80

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 [root @master ~] service/service-clusterip created [root @master ~] NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR service-clusterip ClusterIP 10.97 .97.97 <none> 80 /TCP 13 s app=nginx-pod [root @master ~] Name: service-clusterip Namespace: dev Labels: <none> Annotations: <none> Selector: app=nginx-pod Type : ClusterIPIP: 10.97 .97.97 Port: <unset> 80 /TCP TargetPort: 80 /TCP Endpoints: 10.244 .1.39 :80 ,10.244 .1.40 :80 ,10.244 .2.33 :80 Session Affinity: None Events: <none> [root @master ~] TCP 10.97 .97.97 :80 rr -> 10.244 .1.39 :80 Masq 1 0 0 -> 10.244 .1.40 :80 Masq 1 0 0 -> 10.244 .2.33 :80 Masq 1 0 0 [root @master ~] 10.244 .2.33

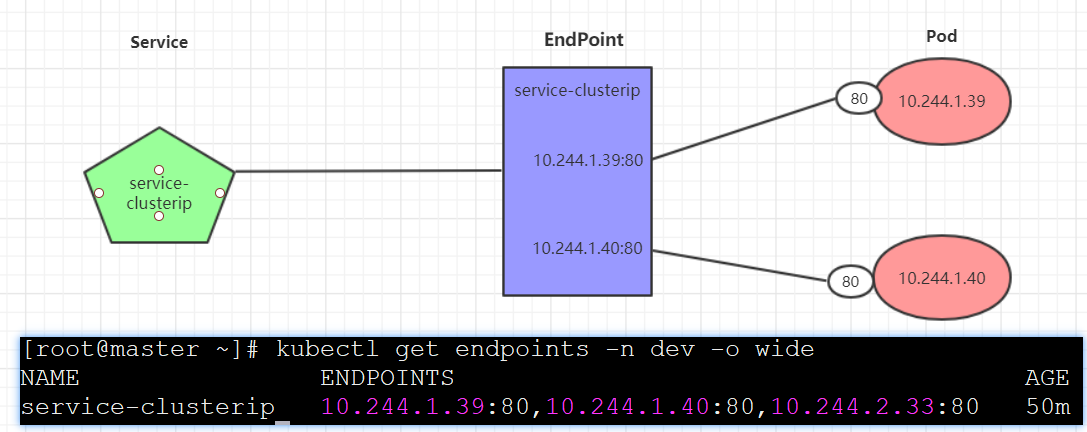

Endpoint

Endpoint是kubernetes中的一个资源对象,存储在etcd中,用来记录一个service对应的所有pod的访问地址,它是根据service配置文件中selector描述产生的。

一个Service由一组Pod组成,这些Pod通过Endpoints暴露出来,Endpoints是实现实际服务的端点集合 。换句话说,service和pod之间的联系是通过endpoints实现的。

负载分发策略

对Service的访问被分发到了后端的Pod上去,目前kubernetes提供了两种负载分发策略:

如果不定义,默认使用kube-proxy的策略,比如随机、轮询

基于客户端地址的会话保持模式,即来自同一个客户端发起的所有请求都会转发到固定的一个Pod上

此模式可以使在spec中添加sessionAffinity:ClientIP选项

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 [root @master ~] TCP 10.97 .97.97 :80 rr -> 10.244 .1.39 :80 Masq 1 0 0 -> 10.244 .1.40 :80 Masq 1 0 0 -> 10.244 .2.33 :80 Masq 1 0 0 [root @master ~] 10.244 .1.40 10.244 .1.39 10.244 .2.33 10.244 .1.40 10.244 .1.39 10.244 .2.33 [root @master ~] TCP 10.97 .97.97 :80 rr persistent 10800 -> 10.244 .1.39 :80 Masq 1 0 0 -> 10.244 .1.40 :80 Masq 1 0 0 -> 10.244 .2.33 :80 Masq 1 0 0 [root @master ~] 10.244 .2.33 10.244 .2.33 10.244 .2.33 [root @master ~] service "service-clusterip" deleted

Headless 类型的Service 在某些场景中,开发人员可能不想使用Service提供的负载均衡功能,而希望自己来控制负载均衡策略,针对这种情况,kubernetes提供了Headless Service,这类Service不会分配Cluster IP,如果想要访问service,只能通过service的域名进行查询。

域名 = service_name .namespace .svc.cluster.local (svc.cluster.local是默认的)

创建service-headless.yaml

1 2 3 4 5 6 7 8 9 10 11 12 13 apiVersion: v1 kind: Service metadata: name: service-headless namespace: dev spec: selector: app: nginx-pod clusterIP: None type: ClusterIP ports: - port: 80 targetPort: 80

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 [root @master ~] service/service-headless created [root @master ~] NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR service-headless ClusterIP None <none> 80 /TCP 11 s app=nginx-pod [root @master ~] Name: service-headless Namespace: dev Labels: <none> Annotations: <none> Selector: app=nginx-pod Type : ClusterIPIP: None Port: <unset> 80 /TCP TargetPort: 80 /TCP Endpoints: 10.244 .1.39 :80 ,10.244 .1.40 :80 ,10.244 .2.33 :80 Session Affinity: None Events: <none> [root @master ~] / nameserver 10.96 .0.10 search dev.svc.cluster.local svc.cluster.local cluster.local [root @master ~] service-headless .dev.svc.cluster.local. 30 IN A 10.244 .1.40 service-headless .dev.svc.cluster.local. 30 IN A 10.244 .1.39 service-headless .dev.svc.cluster.local. 30 IN A 10.244 .2.33

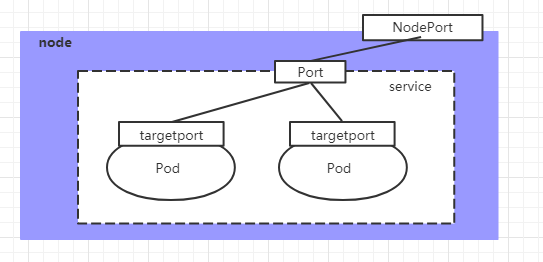

NodePort类型的Service 在之前的样例中,创建的Service的ip地址只有集群内部才可以访问(即master和worker node上可以服务,外部服务器无法访问),如果希望将Service暴露给集群外部使用,那么就要使用到另外一种类型的Service,称为NodePort类型。NodePort的工作原理其实就是将service的端口映射到Node的一个端口上 ,然后就可以通过NodeIp:NodePort来访问service了。

创建service-nodeport.yaml

1 2 3 4 5 6 7 8 9 10 11 12 13 apiVersion: v1 kind: Service metadata: name: service-nodeport namespace: dev spec: selector: app: nginx-pod type: NodePort ports: - port: 80 nodePort: 30002 targetPort: 80

1 2 3 4 5 6 7 8 9 10 [root @master ~] service/service-nodeport created [root @master ~] NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) SELECTOR service-nodeport NodePort 10.105 .64.191 <none> 80 :30002 /TCP app=nginx-pod

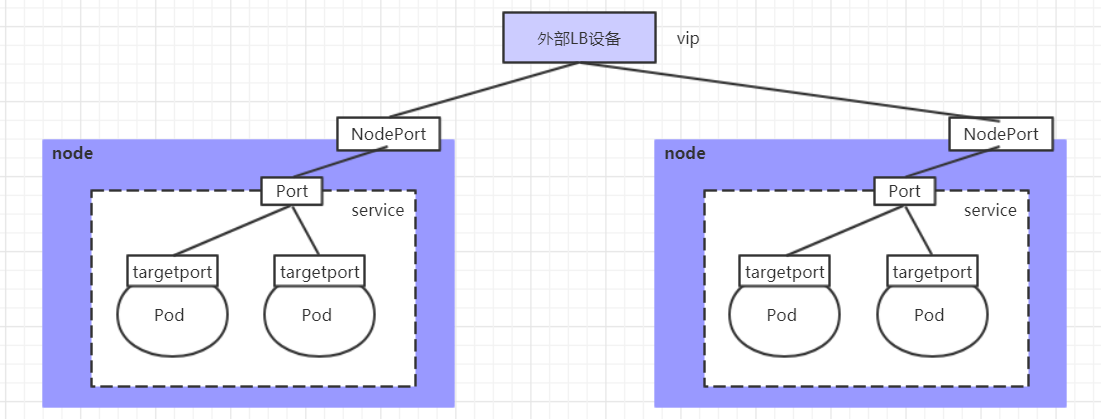

LoadBalancer类型的Service LoadBalancer和NodePort很相似,目的都是向外部暴露一个端口,区别在于LoadBalancer会在集群的外部再来做一个负载均衡设备,而这个设备需要外部环境支持的,外部服务发送到这个设备上的请求,会被设备负载之后转发到集群中。

ExternalName类型的Service ExternalName类型的Service用于引入集群外部的服务,它通过externalName属性指定外部一个服务的地址,然后在集群内部访问此service就可以访问到外部的服务了。

1 2 3 4 5 6 7 8 apiVersion: v1 kind: Service metadata: name: service-externalname namespace: dev spec: type: ExternalName externalName: www.baidu.com

1 2 3 4 5 6 7 8 9 10 [root @master ~] service/service-externalname created [root @master ~] service-externalname .dev.svc.cluster.local. 30 IN CNAME www.baidu.com. www.baidu.com. 30 IN CNAME www.a.shifen.com. www.a.shifen.com. 30 IN A 39.156 .66.18 www.a.shifen.com. 30 IN A 39.156 .66.14

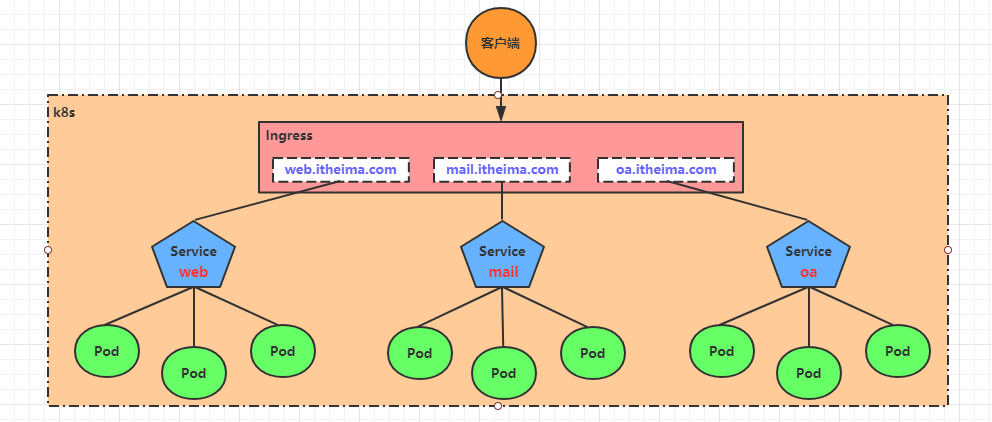

Ingress介绍 在前面课程中已经提到,Service对集群之外暴露服务的主要方式有两种:NotePort和LoadBalancer,但是这两种方式,都有一定的缺点:

NodePort方式的缺点是会占用很多集群机器的端口,那么当集群服务变多的时候,这个缺点就愈发明显

LB方式的缺点是每个service需要一个LB,浪费、麻烦,并且需要kubernetes之外设备的支持

基于这种现状,kubernetes提供了Ingress资源对象,Ingress只需要一个NodePort或者一个LB就可以满足暴露多个Service的需求。工作机制大致如下图表示:

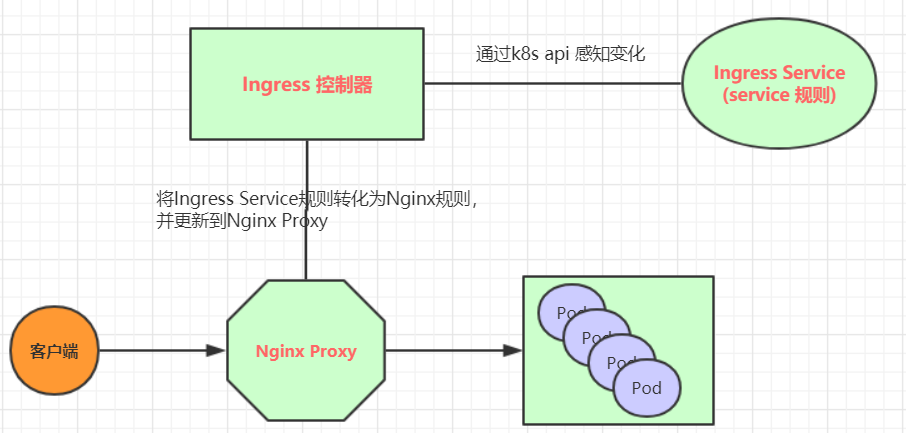

实际上,Ingress相当于一个7层的负载均衡器,是kubernetes对反向代理的一个抽象,它的工作原理类似于Nginx,可以理解成在Ingress里建立诸多映射规则,Ingress Controller通过监听这些配置规则并转化成Nginx的反向代理配置 , 然后对外部提供服务 。在这里有两个核心概念:

ingress:kubernetes中的一个对象,作用是定义请求如何转发到service的规则

ingress controller:具体实现反向代理及负载均衡的程序,对ingress定义的规则进行解析,根据配置的规则来实现请求转发,实现方式有很多,比如Nginx, Contour, Haproxy等等

Ingress(以Nginx为例)的工作原理如下:

用户编写Ingress规则,说明哪个域名对应kubernetes集群中的哪个Service

Ingress控制器动态感知Ingress服务规则的变化,然后生成一段对应的Nginx反向代理配置

Ingress控制器会将生成的Nginx配置写入到一个运行着的Nginx服务中,并动态更新

到此为止,其实真正在工作的就是一个Nginx了,内部配置了用户定义的请求转发规则

Ingress使用 环境准备 搭建ingress环境

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 [root @master ~] [root @master ~] [root @master ingress -controller ] [root @master ingress -controller ] [root @master ingress -controller ] [root @master ingress -controller ] NAME READY STATUS RESTARTS AGE pod/nginx-ingress -controller -fbf967dd5 -4qpbp 1 /1 Running 0 12 h [root @master ingress -controller ] NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE ingress-nginx NodePort 10.98 .75.163 <none> 80 :32240 /TCP,443 :31335 /TCP 11 h

准备service和pod

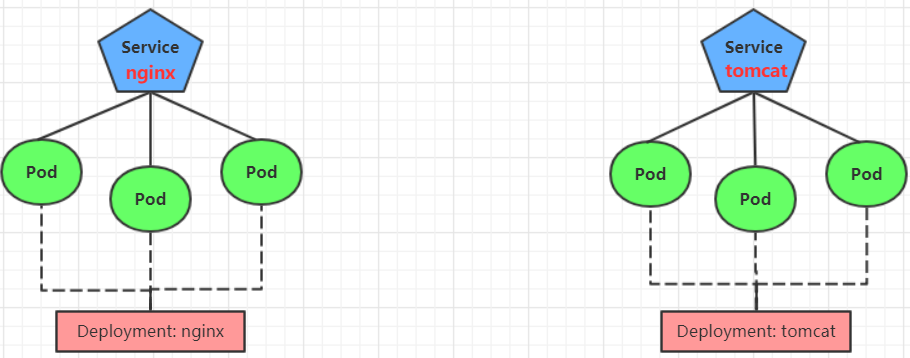

为了后面的实验比较方便,创建如下图所示的模型

创建tomcat-nginx.yaml

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 apiVersion: apps/v1 kind: Deployment metadata: name: nginx-deployment namespace: dev spec: replicas: 3 selector: matchLabels: app: nginx-pod template: metadata: labels: app: nginx-pod spec: containers: - name: nginx image: nginx:1.17.1 ports: - containerPort: 80 --- apiVersion: apps/v1 kind: Deployment metadata: name: tomcat-deployment namespace: dev spec: replicas: 3 selector: matchLabels: app: tomcat-pod template: metadata: labels: app: tomcat-pod spec: containers: - name: tomcat image: tomcat:8.5-jre10-slim ports: - containerPort: 8080 --- apiVersion: v1 kind: Service metadata: name: nginx-service namespace: dev spec: selector: app: nginx-pod clusterIP: None type: ClusterIP ports: - port: 80 targetPort: 80 --- apiVersion: v1 kind: Service metadata: name: tomcat-service namespace: dev spec: selector: app: tomcat-pod clusterIP: None type: ClusterIP ports: - port: 8080 targetPort: 8080

1 2 3 4 5 6 7 8 [root @master ~] [root @master ~] NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE nginx-service ClusterIP None <none> 80 /TCP 48 s tomcat-service ClusterIP None <none> 8080 /TCP 48 s

Http代理 创建ingress-http.yaml

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 apiVersion: extensions/v1beta1 kind: Ingress metadata: name: ingress-http namespace: dev spec: rules: - host: nginx.itheima.com http: paths: - path: / backend: serviceName: nginx-service servicePort: 80 - host: tomcat.itheima.com http: paths: - path: / backend: serviceName: tomcat-service servicePort: 8080

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 [root @master ~] ingress.extensions/ingress-http created [root @master ~] NAME HOSTS ADDRESS PORTS AGE ingress-http nginx.itheima.com,tomcat.itheima.com 80 22 s [root @master ~] ... Rules: Host Path Backends ---- ---- -------- nginx.itheima.com / nginx-service :80 (10.244 .1.96 :80 ,10.244 .1.97 :80 ,10.244 .2.112 :80 ) tomcat.itheima.com / tomcat-service :8080 (10.244 .1.94 :8080 ,10.244 .1.95 :8080 ,10.244 .2.111 :8080 ) ...

Https代理 创建证书

1 2 3 4 5 openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/C=CN/ST=BJ/L=BJ/O=nginx/CN=itheima.com" kubectl create secret tls tls-secret --key tls.key --cert tls.crt

创建ingress-https.yaml

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 apiVersion: extensions/v1beta1 kind: Ingress metadata: name: ingress-https namespace: dev spec: tls: - hosts: - nginx.itheima.com - tomcat.itheima.com secretName: tls-secret rules: - host: nginx.itheima.com http: paths: - path: / backend: serviceName: nginx-service servicePort: 80 - host: tomcat.itheima.com http: paths: - path: / backend: serviceName: tomcat-service servicePort: 8080

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 [root @master ~] ingress.extensions/ingress-https created [root @master ~] NAME HOSTS ADDRESS PORTS AGE ingress-https nginx.itheima.com,tomcat.itheima.com 10.104 .184.38 80 , 443 2 m42s [root @master ~] ... TLS: tls-secret terminates nginx.itheima.com,tomcat.itheima.com Rules: Host Path Backends ---- ---- -------- nginx.itheima.com / nginx-service :80 (10.244 .1.97 :80 ,10.244 .1.98 :80 ,10.244 .2.119 :80 ) tomcat.itheima.com / tomcat-service :8080 (10.244 .1.99 :8080 ,10.244 .2.117 :8080 ,10.244 .2.120 :8080 ) ...